Digging into Network Latency

What the hell?? #

You know how it goes….

You’re sitting there….

And then wonder…

Hey…

Why is this SO SLOW?!?!

Well.. I started looking into possible causes…

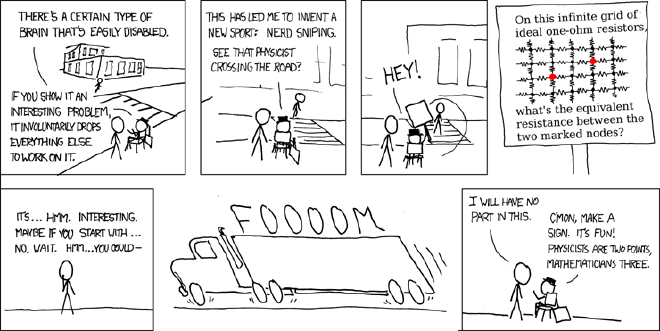

And that’s how I got myself nerd sniped

Fortunately, the wonderful humans over at [calomel.org] have put together a whole lot of really helpful information.

Thanks gang!! In particular, I found their [FreeBSD network tuning guide][calomel-fbsdnetwork] especially helpful!

The Problem I’m noticing #

I noticed that my OSX desktop, and most of the hosts here, could download assets significantly faster from El Internetto™ than my TrueNAS Scale box could.

ss to the rescue:

root@pine# ss -i4tdM -f inet -E

Stuff to look at. #

OPNSense tunings #

netstat -idb

netstat -m

loader.conf settings to tweak #

Stuff found on calomel.org #

Disabling hyperthreading

machdep.hyperthreading_allowed="0"

Use the improved TCP stream algorithm

net.inet.tcp.soreceive_stream="1"

Load the H-TCP, CDG, and CUBIC congestion control modules in loader.conf

cc_htcp_load="YES"

cc_cdg_load="YES"

cc_cubic_load="YES"

keyrate="250.34" # keyboard delay to 250 ms and repeat to 34 cps

Combined, these settings improve the packet-handling pipeline: they create one netisr processing thread per CPU core and pin each thread to a core.

net.isr.maxthreads=-1

net.isr.bindthreads="1"

hw.igb.num_queues="0"

hw.igb.enable_msix=1

# qlimit for igmp, arp, ether and ip6 queues only (netstat -Q) (default 256)

#net.isr.defaultqlimit="2048" # (default 256)

# Size of the syncache hash table, must be a power of 2 (default 512)

#net.inet.tcp.syncache.hashsize="1024"

# Limit the number of entries permitted in each bucket of the hash table. (default 30)

#net.inet.tcp.syncache.bucketlimit="100"

#autoboot_delay="-1" # (default 10) seconds

IPC Socket Buffer: the maximum combined socket buffer size, in bytes, defined by SO_SNDBUF and SO_RCVBUF. kern.ipc.maxsockbuf is also used to define the window scaling factor (wscale in tcpdump) our server will advertise. The window scaling factor determines the maximum volume of data allowed in transit before the receiving server is required to send an ACK packet (acknowledgment) to the sending server.

FreeBSD’s default maxsockbuf value is two (2) megabytes, which corresponds to a window scaling factor (wscale) of six (6), allowing the remote sender to transmit up to 2^6 x 65,535 bytes = 4,194,240 bytes (4MB) in flight on the network before requiring an ACK packet from our server. In order to support the throughput of modern long fat networks (LFN) with variable latency, we suggest increasing the maximum socket buffer to at least 16MB if the system has enough RAM.

“netstat -m” displays the amount of network buffers used. Increase kern.ipc.maxsockbuf if the counters for “mbufs denied” or “mbufs delayed” are greater than zero (0).

See https://en.wikipedia.org/wiki/TCP_window_scale_option and https://en.wikipedia.org/wiki/Bandwidth-delay_product
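To sanity check the arithmetic in that note, here is a minimal Python sketch. It assumes the kernel picks the smallest shift for which 65,535 << shift covers kern.ipc.maxsockbuf, capped at 14 (the limit of the TCP window scale option). The function names are mine, and the 10 Gbit/s, 10 ms path in the example is hypothetical.

```python
TCP_MAXWIN = 65535       # largest window that fits in the 16-bit TCP header field
TCP_MAX_WINSHIFT = 14    # largest shift the window scale option allows


def wscale_for(maxsockbuf: int) -> int:
    """Smallest shift whose scaled window covers maxsockbuf (assumed selection rule)."""
    shift = 0
    while shift < TCP_MAX_WINSHIFT and (TCP_MAXWIN << shift) < maxsockbuf:
        shift += 1
    return shift


def max_window(shift: int) -> int:
    """Largest advertisable window, in bytes, for a given shift."""
    return TCP_MAXWIN << shift


def bdp_bytes(bits_per_second: float, rtt_seconds: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to fill the path."""
    return bits_per_second * rtt_seconds / 8


if __name__ == "__main__":
    # 2 MB default, the suggested 16 MB, and the value set in this post
    for size in (2 * 1024 * 1024, 16 * 1024 * 1024, 157286400):
        shift = wscale_for(size)
        print(f"maxsockbuf={size:>10}  wscale={shift:>2}  window={max_window(shift)} bytes")
    # Hypothetical path: 10 Gbit/s with a 10 ms round trip
    print(f"BDP: {bdp_bytes(10e9, 0.010):.0f} bytes")
```

If the bandwidth-delay product of a path is larger than the window the receiver can advertise, a single TCP stream cannot fill that path no matter how fast the NICs are.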

kern.ipc.maxsockbuf=157286400

#enable scaling

net.inet.tcp.rfc1323=1 # (default 1)

net.inet.tcp.rfc3042=1 # (default 1)

net.inet.tcp.rfc3390=1 # (default 1)

#net.inet.tcp.recvbuf_inc=65536 # (default 16384)

net.inet.tcp.recvbuf_max=4194304 # (default 2097152)

net.inet.tcp.recvspace=65536 # (default 65536)

net.inet.tcp.sendbuf_inc=65536 # (default 8192)

net.inet.tcp.sendbuf_max=4194304 # (default 2097152)

net.inet.tcp.sendspace=65536 # (default 32768)

tuning of the jumbo memory buffers is necessary as well:

kern.ipc.nmbjumbop

```sysctl

net.inet.tcp.delayed_ack=1 # (default 1)

net.inet.tcp.delacktime=10 # (default 100)

net.inet.tcp.mssdflt=1460

#net.inet.tcp.mssdflt=1240 # goog's http/3 QUIC spec

#net.inet.tcp.mssdflt=8000

net.inet.tcp.minmss=536 # (default 216)

net.inet.tcp.abc_l_var=44 # (default 2)

net.inet.tcp.initcwnd_segments=44

# Selective Acknowledgement

net.inet.tcp.sack.enable=1

net.inet.tcp.rfc6675_pipe=1 # (default 0)

# use RFC8511 TCP Alternative Backoff with ECN

net.inet.tcp.cc.abe=1 # (default 0, disabled)

net.inet.tcp.syncache.rexmtlimit=0 # (default 3)

#disable syncookies

net.inet.tcp.syncookies=0

# Disable TCP Segmentation Offload

net.inet.tcp.tso=0

# Increasing the number of packets that can be processed per interrupt is advisable.
# The default of 0 means 16 frames (less than 24 kB). See iflib(4) for more info.

dev.ixl.0.iflib.rx_budget=65535

dev.ixl.1.iflib.rx_budget=65535

dev.ixl.2.iflib.rx_budget=65535

dev.ixl.3.iflib.rx_budget=65535

```

```sysctl
kern.random.fortuna.minpoolsize=128
kern.random.harvest.mask=33119
```

Hardening

kern.ipc.shm_use_phys=1

kern.msgbuf_show_timestamp=1

net.inet.ip.portrange.randomtime=5

net.inet.tcp.blackhole=2

net.inet.tcp.fast_finwait2_recycle=1 # recycle FIN/WAIT states quickly, helps against DoS, but may cause false RST (default 0)

net.inet.tcp.fastopen.client_enable=0 # disable TCP Fast Open client side, enforce three way TCP handshake (default 1, enabled)

net.inet.tcp.fastopen.server_enable=0 # disable TCP Fast Open server side, enforce three way TCP handshake (default 0)

net.inet.tcp.finwait2_timeout=1000 # TCP FIN_WAIT_2 timeout waiting for client FIN packet before state close (default 60000, 60 sec)

net.inet.tcp.icmp_may_rst=0 # icmp may not send RST to avoid spoofed icmp/udp floods (default 1)

net.inet.tcp.keepcnt=2 # amount of tcp keep alive probe failures before socket is forced closed (default 8)

net.inet.tcp.keepidle=62000 # time before starting tcp keep alive probes on an idle, TCP connection (default 7200000, 7200 secs)

net.inet.tcp.keepinit=5000 # tcp keep alive client reply timeout (default 75000, 75 secs)

net.inet.tcp.msl=2500 # Maximum Segment Lifetime, time the connection spends in TIME_WAIT state (default 30000, 2*MSL = 60 sec)

net.inet.tcp.path_mtu_discovery=1 # disable for mtu=1500 as most paths drop ICMP type 3 packets, but keep enabled for mtu=9000 (default 1)

net.inet.udp.blackhole=1 # drop udp packets destined for closed sockets (default 0)

net.inet.udp.recvspace=1048576 # UDP receive space, HTTP/3 webserver, "netstat -sn -p udp" and increase if full socket buffers (default 42080)

#security.bsd.hardlink_check_gid=1 # unprivileged processes may not create hard links to files owned by other groups, DISABLE for mailman (default 0)

#security.bsd.hardlink_check_uid=1 # unprivileged processes may not create hard links to files owned by other users, DISABLE for mailman (default 0)

security.bsd.see_other_gids=0 # groups only see their own processes. root can see all (default 1)

security.bsd.see_other_uids=0 # users only see their own processes. root can see all (default 1)

security.bsd.stack_guard_page=1 # insert a stack guard page ahead of growable segments, stack smashing protection (SSP) (default 0)

security.bsd.unprivileged_proc_debug=0 # unprivileged processes may not use process debugging (default 1)

security.bsd.unprivileged_read_msgbuf=0 # unprivileged processes may not read the kernel message buffer (default 1)

net.inet.raw.maxdgram=128000

net.inet.raw.recvspace=128000

net.local.stream.sendspace=128000

net.local.stream.recvspace=128000

kern.ipc.soacceptqueue=2048

increase max threads per process

kern.threads.max_threads_per_proc=9000

tune tcp keepalives

net.inet.tcp.keepidle=10000 # (default 7200000 )

net.inet.tcp.keepintvl=5000 # (default 75000 )

net.inet.tcp.always_keepalive=1 # (default 1)
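As a rough illustration of what the keepalive values mean in practice: a dead peer is noticed after about keepidle + keepcnt x keepintvl. The sketch below assumes that simple model (exact probe timing differs a little between implementations), and the function name is mine. The tuned numbers are keepidle=10000 and keepintvl=5000 from just above, plus keepcnt=2 from the hardening list.

```python
def dead_peer_detection_ms(keepidle_ms: int, keepintvl_ms: int, keepcnt: int) -> int:
    """Approximate time until an idle connection to a dead peer is dropped."""
    return keepidle_ms + keepintvl_ms * keepcnt


if __name__ == "__main__":
    # Defaults as quoted in the comments: 7200000 ms idle, 75000 ms interval, 8 probes
    default = dead_peer_detection_ms(7_200_000, 75_000, 8)
    # Tuned values from this post
    tuned = dead_peer_detection_ms(10_000, 5_000, 2)
    print(f"default: {default / 1000:.0f} s")
    print(f"tuned:   {tuned / 1000:.0f} s")
```

So under this model the defaults take over two hours to give up on a dead connection, while the tuned values give up after roughly twenty seconds.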

vfs.read_max=128

Stuff found elsewhere #

Unrelated-ish: I found some GitHub issues ([4141][powerdns-issue-4141], [5745][powerdns-issue-5745]) about PowerDNS overloading the local socket buffers:

131072

net.local.stream.recvspace: 8192

net.local.stream.sendspace: 8192

net.local.dgram.recvspace: 65536

net.local.dgram.maxdgram: 65535

net.local.seqpacket.recvspace: 8192

net.local.seqpacket.maxseqpacket: 8192

hw.hn.vf_transparent: 1

hw.hn.use_if_start: 0

net.link.ifqmaxlen #50 -> 2048 per https://redmine.pfsense.org/issues/10311

Things I tried #

pkg install devcpu-data-intel-20220510

iovctl

ixl driver tunables:

- hw.ixl.rx_itr: The RX interrupt rate value, set to 62 (124 usec) by default.
- hw.ixl.tx_itr: The TX interrupt rate value, set to 122 (244 usec) by default.
- hw.ixl.i2c_access_method: Access method that driver will use for I2C read and writes via sysctl(8) or verbose ifconfig(8) information display. Using the Admin Queue is only supported on 710 devices with FW version 1.7 or newer. Set to 0 by default.
  - 0: best available method
  - 1: bit bang via I2CPARAMS register
  - 2: register read/write via I2CCMD register
  - 3: Use Admin Queue command (default best)
- hw.ixl.enable_tx_fc_filter: Filter out packets with Ethertype 0x8808 from being sent out by non-adapter sources. This prevents (potentially untrusted) software or iavf(4) devices from sending out flow control packets and creating a DoS (Denial of Service) event. Enabled by default.
- hw.ixl.enable_head_writeback: When the driver is finding the last TX descriptor processed by the hardware, use a value written to memory by the hardware instead of scanning the descriptor ring for completed descriptors. Enabled by default; disable to mimic the TX behavior found in ixgbe(4).
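The two ITR defaults above (62 is 124 usec, 122 is 244 usec) suggest each ITR unit is 2 usec. That unit is my inference from those two numbers, not something I found stated outright. Assuming it holds, this small sketch turns an ITR value into an interval and a ceiling on interrupts per second per queue (helper names are mine):

```python
ITR_UNIT_USEC = 2  # inferred from the documented defaults: 62 -> 124 usec, 122 -> 244 usec


def itr_to_usec(itr: int) -> int:
    """Minimum gap between interrupts, in microseconds, for an ITR value."""
    return itr * ITR_UNIT_USEC


def max_interrupts_per_second(itr: int) -> float:
    """Upper bound on the interrupt rate implied by the ITR value."""
    return 1_000_000 / itr_to_usec(itr)


if __name__ == "__main__":
    for name, itr in (("hw.ixl.rx_itr", 62), ("hw.ixl.tx_itr", 122)):
        print(f"{name}={itr}: {itr_to_usec(itr)} usec, "
              f"at most {max_interrupts_per_second(itr):.0f} interrupts/s")
```

A lower ITR value means more interrupts and lower latency, while a higher one batches more packets per interrupt at the cost of latency.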

SYSCTL PROCEDURES

dev.ixl.#.fc

Sets the 802.3x flow control mode that the adapter will advertise on the link. The negotiated flow control setting can be viewed in the interface’s media field in ifconfig(8).

- 0 disables flow control
- 1 is RX pause
- 2 is TX pause
- 3 enables full flow control
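The four values read like a two-bit mask (bit 0 for RX pause, bit 1 for TX pause). That is my reading of the list rather than something the man page spells out. A small sketch under that assumption, with hypothetical helper names, that decodes a value and builds the matching sysctl command line:

```python
FC_MODES = {
    0: "flow control disabled",
    1: "RX pause",
    2: "TX pause",
    3: "full (RX and TX pause)",
}


def describe_fc(value: int) -> str:
    """Human-readable name for a dev.ixl.#.fc value."""
    if value not in FC_MODES:
        raise ValueError(f"fc must be 0..3, got {value}")
    return FC_MODES[value]


def fc_bits(value: int) -> tuple:
    """Split a mode into (rx_pause, tx_pause), assuming a two-bit mask."""
    describe_fc(value)  # reuse the range check
    return bool(value & 1), bool(value & 2)


def fc_sysctl_command(unit: int, value: int) -> str:
    """Build the sysctl(8) command that sets the mode on ixl<unit>."""
    describe_fc(value)
    return f"sysctl dev.ixl.{unit}.fc={value}"


if __name__ == "__main__":
    for mode in range(4):
        print(mode, describe_fc(mode), fc_bits(mode))
    print(fc_sysctl_command(0, 0))
```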

dev.ixl.#.advertise_speed Set the speeds that the interface will advertise on the link. dev.ixl.#.supported_speeds contains the speeds that are allowed to be set.

dev.ixl.#.current_speed Displays the current speed.

dev.ixl.#.fw_version Displays the current firmware and NVM versions of the adapter.

dev.ixl.#.debug.switch_vlans

Set the Ethertype used by the hardware itself to handle internal services.

Frames with this Ethertype will be dropped without notice.

Defaults to 0x88a8, which is a well known number for IEEE 802.1ad VLAN stacking.

If you need 802.1ad support, set this number to any other Ethertype, e.g. 0xffff.

INTERRUPT STORMS

It is important to note that 40G operation can generate high numbers of interrupts, often incorrectly being interpreted as a storm condition in the kernel. It is suggested that this be resolved by setting:

hw.intr_storm_threshold: 0

IOVCTL OPTIONS

The driver supports additional optional parameters for created VFs (Virtual Functions) when using iovctl(8):

- mac-addr (unicast-mac): Set the Ethernet MAC address that the VF will use. If unspecified, the VF will use a randomly generated MAC address.
- mac-anti-spoof (bool): Prevent the VF from sending Ethernet frames with a source address that does not match its own.
- allow-set-mac (bool): Allow the VF to set its own Ethernet MAC address.
- allow-promisc (bool): Allow the VF to inspect all of the traffic sent to the port.
- num-queues (uint16_t): Specify the number of queues the VF will have. By default, this is set to the number of MSI-X vectors supported by the VF minus one.

An up to date list of parameters and their defaults can be found by using iovctl(8) with the -S option.

Tests #

```
Result 1

Interface        lagg0_vlan2
Start Time       2022-07-04 20:12:10 -0500
Port             27449

General
  Time           Tue, 05 Jul 2022 01:14:37 UTC
  Duration       30
  Block Size     131072

Connection
  Local Host     192.0.2.1
  Local Port     27449
  Remote Host    192.0.2.23
  Remote Port    42218

CPU Usage
  Host Total     78.54
  Host User      14.81
  Host System    63.75
  Remote Total   0.00
  Remote User    0.00
  Remote System  0.00

Performance Data
  Start            0          0
  End              30.000273  30.000273
  Seconds          30.000273  30.000273
  Bytes            0          36067520404
  Bits Per Second  0          9617917918.01361

PINETEST:  iperf 3.9
PINETEST:
PINETEST:  Linux pine 5.15.45+truenas #1 SMP Fri Jun 17 19:32:18 UTC 2022 x86_64
Control connection MSS 9044
PINETEST:  Time: Tue, 05 Jul 2022 01:14:37 GMT
PINETEST:  Connecting to host 192.0.2.1, port 27449
PINETEST:        Cookie: 6yl23mxmlzitkz3wwertio5zl2nqcw6g553a
PINETEST:        TCP MSS: 9044 (default)
PINETEST:  [  5] local 192.0.2.23 port 42218 connected to 192.0.2.1 port 27449
PINETEST:  Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 30 second test, tos 0
PINETEST:  [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
PINETEST:  [  5]   0.00-1.00   sec  1.15 GBytes  9.88 Gbits/sec    0   1.99 MBytes
PINETEST:  [  5]   1.00-2.00   sec  1.15 GBytes  9.83 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   2.00-3.00   sec  1.13 GBytes  9.72 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   3.00-4.00   sec  1.14 GBytes  9.75 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   4.00-5.00   sec  1.10 GBytes  9.42 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   5.00-6.00   sec  1.02 GBytes  8.76 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   6.00-7.00   sec  1.08 GBytes  9.31 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   7.00-8.00   sec  1.09 GBytes  9.32 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   8.00-9.00   sec  1.12 GBytes  9.60 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]   9.00-10.00  sec  1.15 GBytes  9.88 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  10.00-11.00  sec  1.15 GBytes  9.89 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  11.00-12.00  sec  1.15 GBytes  9.86 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  12.00-13.00  sec  1.15 GBytes  9.83 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  13.00-14.00  sec  1.15 GBytes  9.86 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  14.00-15.00  sec  1.11 GBytes  9.50 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  15.00-16.00  sec  1.10 GBytes  9.43 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  16.00-17.00  sec  1.13 GBytes  9.70 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  17.00-18.00  sec  1.14 GBytes  9.83 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  18.00-19.00  sec  1.15 GBytes  9.87 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  19.00-20.00  sec  1.15 GBytes  9.86 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  20.00-21.00  sec  1.08 GBytes  9.26 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  21.00-22.00  sec  1.13 GBytes  9.70 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  22.00-23.00  sec  1.13 GBytes  9.69 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  23.00-24.00  sec  1.05 GBytes  9.03 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  24.00-25.00  sec  1.06 GBytes  9.10 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  25.00-26.00  sec  1.15 GBytes  9.86 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  26.00-27.00  sec  1.15 GBytes  9.86 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  27.00-28.00  sec  1.14 GBytes  9.80 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  28.00-29.00  sec  1.09 GBytes  9.39 Gbits/sec    0   2.09 MBytes
PINETEST:  [  5]  29.00-30.00  sec  1.14 GBytes  9.78 Gbits/sec    0   2.09 MBytes
PINETEST:  - - - - - - - - - - - - - - - - - - - - - - - - -
PINETEST:  Test Complete. Summary Results:
PINETEST:  [ ID] Interval           Transfer     Bitrate         Retr
PINETEST:  [  5]   0.00-30.00  sec  33.6 GBytes  9.62 Gbits/sec    0             sender
PINETEST:  [  5]   0.00-30.00  sec  33.6 GBytes  9.62 Gbits/sec                  receiver
PINETEST:  CPU Utilization: local/sender 52.7% (1.3%u/51.4%s), remote/receiver 78.5% (14.8%u/63.7%s)
PINETEST:  snd_tcp_congestion cubic
PINETEST:  rcv_tcp_congestion newreno
PINETEST:
PINETEST:  iperf Done.
```
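As a quick cross-check that the summary numbers agree with each other, here is a small Python sketch that recomputes the bitrate from the byte count and duration in Result 1. The function names are mine. It also assumes iperf3 prints "GBytes" in units of 1024^3 bytes and "Gbits/sec" in units of 10^9 bits.

```python
def throughput_bps(total_bytes: int, seconds: float) -> float:
    """Average throughput in bits per second."""
    return total_bytes * 8 / seconds


def as_gibibytes(total_bytes: int) -> float:
    """Byte count expressed in units of 1024**3, matching the 'GBytes' column (assumed)."""
    return total_bytes / 1024**3


if __name__ == "__main__":
    total_bytes = 36_067_520_404   # "Bytes" from Performance Data
    seconds = 30.000273            # "Seconds" from Performance Data
    bps = throughput_bps(total_bytes, seconds)
    print(f"{bps:.2f} bit/s")
    print(f"{bps / 1e9:.2f} Gbit/s")
    print(f"{as_gibibytes(total_bytes):.1f} GBytes transferred")
```

The recomputed figures line up with the 9.62 Gbits/sec and 33.6 GBytes in the iperf summary, so on the LAN side this box is moving data at close to what the test link can carry.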

[xkcd-nerdsnipe-img]: <https://imgs.xkcd.com/comics/nerd_sniping.png>

[xkcd-nerdsnipe-comic]: <https://xkcd.com/356>

[calomel.org]: <https://calomel.org>

[cloudflare-fragpost]: <https://blog.cloudflare.com/ip-fragmentation-is-broken/>

[icmptest]: <http://icmpcheck.popcount.org>

[powerdns-issue-5745]: <https://github.com/PowerDNS/pdns/issues/5745>

[powerdns-issue-4141]: <https://github.com/PowerDNS/pdns/issues/4141>

[pfsense-redmine-vf-settings]: <https://redmine.pfsense.org/issues/9647>

[pfsense-forums-vf-settings]: <https://forum.netgate.com/topic/169884/after-upgrade-inter-v-lan-communication-is-very-slow-on-hyper-v/50?lang=en-US>